We present TextureDreamer, a novel image-guided texture synthesis method to transfer relightable textures from a small number of input images (3 to 5) to target 3D shapes across arbitrary categories.

Texture creation is a pivotal challenge in vision and graphics. Industrial companies hire experienced artists to manually craft textures for 3D assets. Classical methods require densely sampled views and accurately aligned geometry, while learning-based methods are confined to category-specific shapes within the dataset. In contrast, TextureDreamer can transfer highly detailed, intricate textures from real-world environments to arbitrary objects using only a few casually captured images, which could make texture creation far more accessible.

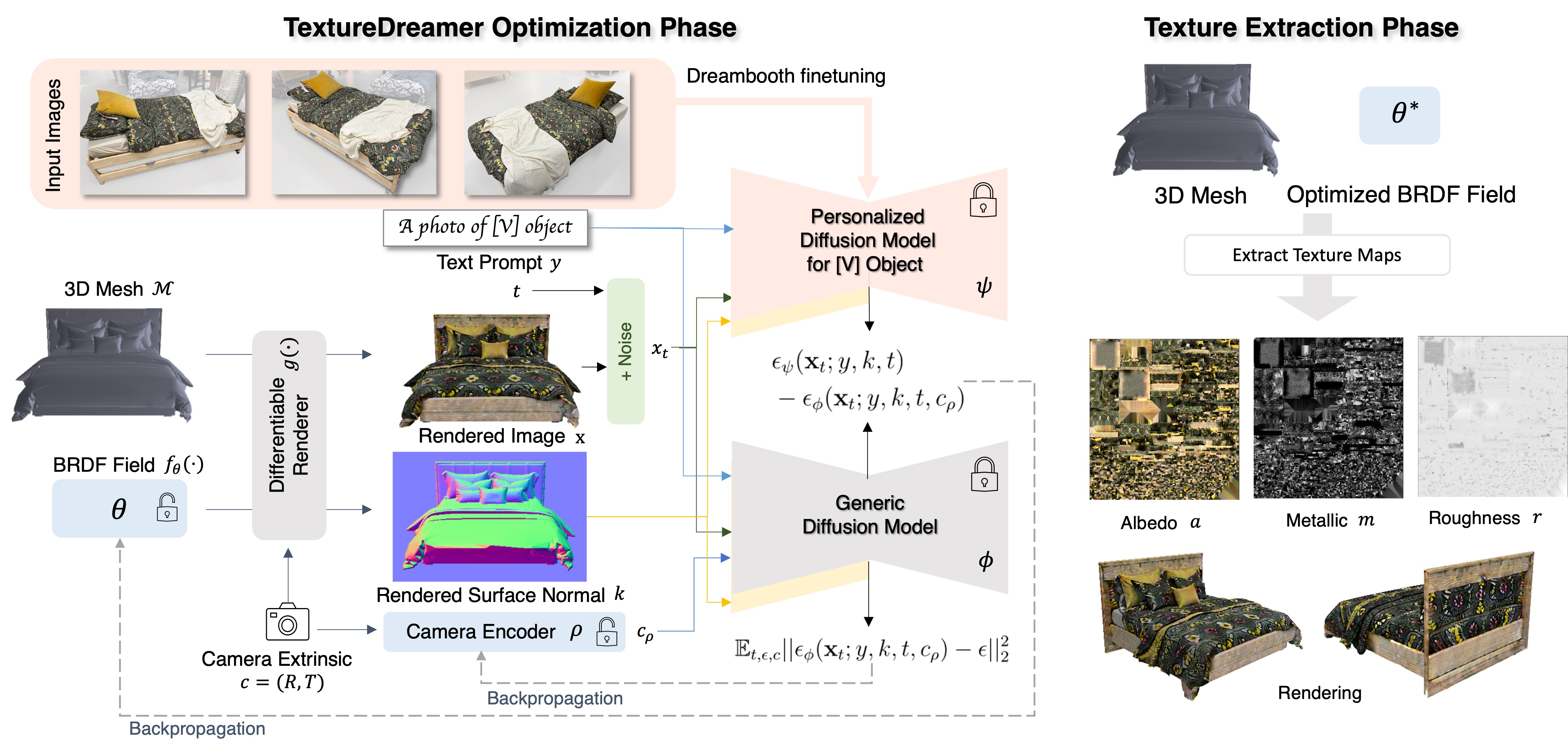

Our core idea, personalized geometry-aware score distillation (PGSD), draws inspiration from recent advancements in diffusion models, including personalized modeling for texture information extraction, variational score distillation for detailed appearance synthesis, and explicit geometry guidance with ControlNet. Our integration and several essential modifications substantially improve the texture quality. Experiments on real images spanning different categories show that TextureDreamer can successfully transfer highly realistic, semantically meaningful textures to arbitrary objects, surpassing the visual quality of previous state-of-the-art methods.
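To make the score-distillation ingredient more concrete, here is a minimal sketch of a generic variational-score-distillation-style gradient with respect to a rendered image. It is not the PGSD implementation: `eps_personalized` and `eps_variational` are hypothetical stand-ins for the personalized noise predictor and the auxiliary (variational) noise predictor, `cond` is an opaque placeholder for any text or geometry conditioning, and the constant stubs in the example exist only so the code runs.

```python
import numpy as np


def vsd_image_gradient(x0, alpha_bar, eps_personalized, eps_variational,
                       cond=None, weight=1.0, rng=None):
    """Return a VSD-style gradient with respect to the rendered image x0.

    All names here are illustrative assumptions, not the paper's API.
    alpha_bar is the cumulative noise-schedule term for the sampled timestep.
    """
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.standard_normal(x0.shape)
    # Forward diffusion: noise the rendered image to the sampled timestep.
    x_t = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise
    # The update direction is the difference between the two noise predictions.
    return weight * (eps_personalized(x_t, cond) - eps_variational(x_t, cond))


# Stub predictors (assumptions) that return constant noise estimates.
def stub_personalized(x_t, cond):
    return np.ones_like(x_t)


def stub_variational(x_t, cond):
    return np.full_like(x_t, 0.25)


rendered = np.zeros((8, 8, 3))
grad = vsd_image_gradient(rendered, 0.5, stub_personalized, stub_variational,
                          rng=np.random.default_rng(0))
```

In a real pipeline this image-space gradient would be backpropagated through a differentiable renderer to the appearance parameters rather than applied to the pixels directly.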

Given 3-5 images, we first obtain a personalized diffusion model through DreamBooth fine-tuning. The spatially-varying bidirectional reflectance distribution function (BRDF) field is then optimized through personalized geometry-aware score distillation (PGSD). After optimization finishes, high-resolution texture maps corresponding to albedo, metallic, and roughness can be extracted from the optimized BRDF field.
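The final extraction step can be pictured as baking: query the optimized field at the 3D surface point behind each texel and write the result into per-channel maps. The sketch below assumes a hypothetical `brdf_field` callable that maps `(N, 3)` points to `(N, 5)` values ordered as albedo RGB, metallic, roughness, plus a precomputed per-texel position map and validity mask; these names and the channel layout are illustrative assumptions, not the authors' code.

```python
import numpy as np


def bake_texture_maps(brdf_field, texel_positions, texel_mask):
    """Query a BRDF field at per-texel surface points and return PBR maps.

    texel_positions: (H, W, 3) surface point for each texel (assumed given).
    texel_mask:      (H, W) boolean, True where a texel lies on the mesh.
    """
    h, w, _ = texel_positions.shape
    albedo = np.zeros((h, w, 3), dtype=np.float32)
    metallic = np.zeros((h, w, 1), dtype=np.float32)
    roughness = np.zeros((h, w, 1), dtype=np.float32)

    points = texel_positions[texel_mask]            # (N, 3) valid texels only
    params = np.clip(brdf_field(points), 0.0, 1.0)  # (N, 5), kept in [0, 1]

    albedo[texel_mask] = params[:, 0:3]
    metallic[texel_mask] = params[:, 3:4]
    roughness[texel_mask] = params[:, 4:5]
    return {"albedo": albedo, "metallic": metallic, "roughness": roughness}


def toy_field(points):
    """Toy stand-in for an optimized BRDF field (assumption for the demo)."""
    n = points.shape[0]
    rgb = 0.5 + 0.5 * np.sin(points)
    return np.concatenate([rgb, np.zeros((n, 1)), np.full((n, 1), 0.5)], axis=1)


positions = np.zeros((4, 4, 3))
mask = np.ones((4, 4), dtype=bool)
mask[0, 0] = False  # one texel outside the UV islands
maps = bake_texture_maps(toy_field, positions, mask)
```

Texels outside the mask stay at zero here; a production baker would typically dilate valid texels into that padding to avoid seams.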

We compare our method with Latent-Paint and TEXTure.

Our method can synthesize diverse patterns from the same set of images.

@article{yeh2024texturedreamer,
  title={TextureDreamer: Image-guided Texture Synthesis through Geometry-aware Diffusion},
  author={Yeh, Yu-Ying and Huang, Jia-Bin and Kim, Changil and Xiao, Lei and Nguyen-Phuoc, Thu and Khan, Numair and Zhang, Cheng and Chandraker, Manmohan and Marshall, Carl S and Dong, Zhao and others},
  journal={arXiv preprint arXiv:2401.09416},
  year={2024}
}